Office 365 is a cloud service with major adoption. One part of this is removing the on-premises Exchange servers and instead leveraging the Outlook Online service. The typical increase in allowed mailbox size is a big selling point, and additional benefits are added every day.

Migration of PST

The increased mailbox size starts the discussion of how to eliminate all the PST files spread among the local client hard drives and file servers in an organization. Microsoft has offered the PST Capture tool (scan all devices, locate all PSTs and import them), and as of last year (2015) the Import PST Files to Office 365 service allows sysadmins to perform a more controlled (batch) upload of files. As always, end users can migrate the data themselves via Outlook.

None of these ways are “great”. The PST Capture Tool has a great process for collecting files and dumping them, but it's essentially a gather tool that, without any intelligence about what the user wants, dumps anything it can find into a mailbox.

The newly arrived Import PST Files service is a batch-management tool that seems to target admins who have a bunch of files to upload at a single time (or potentially a few times). The options are either to upload the files into an Office 365 managed Azure Storage Space or simply to ship a hard drive with a collection of files.

A few people have explained that the Office 365 Import service has a PowerShell interface. Unfortunately it's not documented and Microsoft support does not acknowledge that it exists.

In addition to the above options provided by Microsoft there are a few third-party options, such as MessageOps.

End-user driven migration

To make something that is end-user friendly, a bit of automation is required on top of the above tools. Currently a dropbox (a folder where users can dump their PST files) has been designed, however in order to get that working there are quite a few hurdles to overcome before any favourable result is produced. The notes below are for my own memory…

Office 365 Import Service

As the Office 365 Import Service is a Microsoft-supported tool, it was considered the most reliable option of all of the above. Requiring end users to migrate the data with Outlook seemed to be a high-cost and not very friendly solution. The PST Capture tool was likely to migrate data that wasn’t relevant to the user, with the added risk that something was missed in the process. Third-party options incurred additional licensing costs (on top of any Office 365 licensing) and were therefore discarded early in the process.

Account requirements

To initiate anything with the Office 365 PST Import service you are required to have certain permissions, and to use only simple authentication if the intent is to automate the process. If any type of multifactor authentication is enabled, the ability to connect to Office 365 via a PowerShell session is disabled.

Permissions

A service account has to be set up and assigned the Mailbox Import Export role. This role isn’t granted to any group by default, so it's recommended to create a new group, assign the role to it and make the service account a member of the group.
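A minimal sketch of that group setup, assuming an Exchange Online session is already imported without a prefix (with the O365 prefix used later in these notes the cmdlet would be New-O365RoleGroup). The function name, the group name and the account name are hypothetical placeholders of mine.

```powershell
# Sketch only: names are placeholders, not values from a real tenant.
function New-PstImportRoleGroup {
    param(
        [string]$GroupName = 'PST Import Operators',
        [Parameter(Mandatory)] [string]$ServiceAccount
    )
    # Create a role group that holds only the Mailbox Import Export role
    # and add the service account as its first member.
    New-RoleGroup -Name $GroupName -Roles 'Mailbox Import Export' -Members $ServiceAccount
}

# Usage (not executed here):
# New-PstImportRoleGroup -ServiceAccount 'svc-pstimport@company.onmicrosoft.com'
```

After the group is created it may take a while, and a new session, before the import cmdlets show up for the service account.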

It also seems that to access the Office 365 PST Import Service from the portal one has to be a Global Admin as well. The PowerShell cmdlets are only available once the Mailbox Import Export role has been assigned.

Storage (Azure)

The Office 365 Import Service offers to set up an Azure Storage Space for the tenant, and will provide the Shared Access Signature (SAS) required to upload files (if using the network upload) or a storage key (if using the option of shipping a hard drive). For any kind of automation, the only source the Office 365 PST Import service can read from is a blob located in an Azure Storage Space. It seems that sometime in March 2016 the previous method, using the storage key to generate a SAS that allows read operations for the Import Service (technically these are performed by the Mailbox Replication Service provided by Office 365), was discontinued. One can still find a storage key for the option of sending in a hard drive, however that does not seem to use the same upload space as the network upload, and therefore the storage key can’t be used.

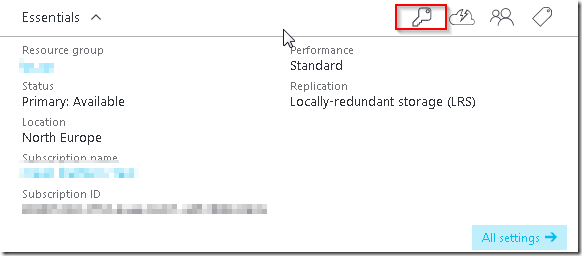

Fortunately the import only requires an Azure Storage Space, which can be provided via a normal Azure subscription. Set up a Blob in an Azure Storage Space and you immediately have access to the storage space. Once the Storage Space is set up you can retrieve the storage key by locating the key icon.

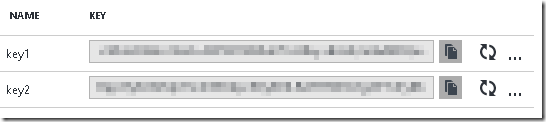

There will be two keys which you can leverage.

To generate a Shared Access Signature (needed for the automation part) you can download the Azure Storage Explorer 6. The tool provides a quick and easy way to view what's in a Blob on the Storage Space, as well as to generate the SAS key.

Once you start the tool choose to add your storage space with the storage key above. Remember to check HTTPS.

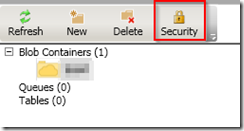

Once you have connected to your Storage Space, choose to create a new Blob (with no anonymous access). Once this is created you can press Security to start generating the SAS-key.

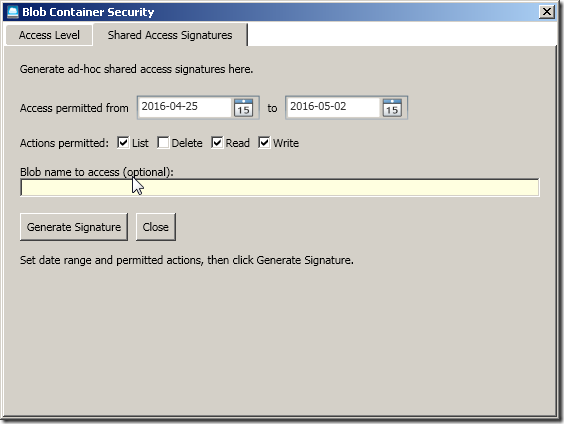

Generate the signature by selecting a start-date (keep track of what timezone you are in) and the end-date. These dates will set the validity for the period of your SAS-key. Remember to define the actions you want to allow. To upload files you need to allow write, and to use the SAS-key for importing the files you need to allow read. There is the possibility to generate multiple SAS-keys and use them for different parts of the process.
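For the automation part the same kind of signature can probably also be generated from Azure PowerShell instead of the GUI. This is a sketch under the assumption that the Azure PowerShell storage cmdlets (the same family as New-AzureStorageContext used for the cleanup later) are installed. The function name and the default validity of seven days are my own placeholders.

```powershell
# Sketch only: wraps the Azure PowerShell storage cmdlets; nothing runs until the function is called.
function New-PstContainerSas {
    param(
        [Parameter(Mandatory)] [string]$StorageAccountName,
        [Parameter(Mandatory)] [string]$StorageAccountKey,
        [Parameter(Mandatory)] [string]$ContainerName,
        [string]$Permission = 'rw',   # r = read (import), w = write (upload)
        [int]$ValidDays = 7           # hypothetical default validity period
    )
    $context = New-AzureStorageContext -StorageAccountName $StorageAccountName -StorageAccountKey $StorageAccountKey
    # Use UTC so the start time does not depend on the local timezone.
    $start = (Get-Date).ToUniversalTime()
    New-AzureStorageContainerSASToken -Name $ContainerName -Permission $Permission `
        -StartTime $start -ExpiryTime $start.AddDays($ValidDays) -Context $context
}

# Usage (not executed here):
# $sas = New-PstContainerSas -StorageAccountName 'yourstorage' -StorageAccountKey $key -ContainerName 'folder'
```

Calling it twice, once with 'w' and once with 'r', gives separate signatures for the upload and the import.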

A SAS key is built of multiple parts; here is a brief explanation:

- sv = storage services version; 2014-02-14
- sr = storage resource; b (blob), c (container)
- sig = signature
- st = start time; 2016-02-01T13%3A30%3A00Z
- se = expiration time; 2016-02-09T13%3A30%3A00Z
- sp = permissions; rw (read, write)

Sample:

?sv=2012-02-12&se=9999-12-31T23%3A59%3A59Z&sr=c&si=IngestionSasForAzCopy201601121920498117&sig=Vt5S4hVzlzMcBkuH8bH711atBffdrOS72TlV1mNdORg%3D
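When a 403 shows up it helps to see what a token actually contains. The sketch below splits a SAS string into its named parts and URL-decodes the values; the function name is a hypothetical helper of mine. Note that the sample above carries si (a reference to a stored access policy on the container) instead of explicit st and sp values.

```powershell
# Sketch only: plain string handling, no Azure cmdlets required.
function ConvertFrom-SasToken {
    param([Parameter(Mandatory)] [string]$Token)
    $parts = [ordered]@{}
    foreach ($pair in $Token.TrimStart('?').Split('&')) {
        # Split on the first '=' only, then decode %3A, %3D and friends.
        $name, $value = $pair.Split('=', 2)
        $parts[$name] = [uri]::UnescapeDataString($value)
    }
    $parts
}

# Usage:
# (ConvertFrom-SasToken -Token $sas)['se']   # expiry time of the token
```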

Copy files to Azure Storage Space

There are multiple ways to copy files to an Azure Storage Space. You can use the Azure Storage Explorer 6 that was used to generate the SAS key. Someone has provided a GUI for the AzCopy command-line tool, but for automation the AzCopy command line is the route to go. Microsoft has written an excellent guide for this which doesn’t need any additional information.

Connecting to Office 365

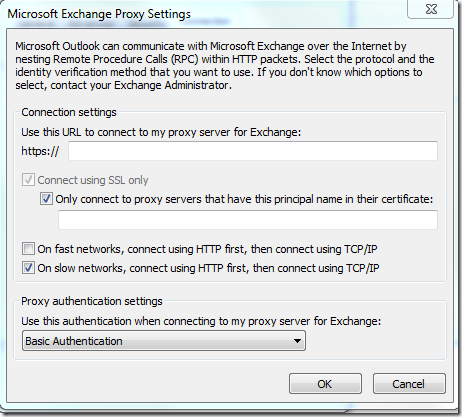

Any type of automated management of Office 365 is performed via a PSSession (PowerShell session). Importing a PSSession makes all available cmdlets from Office 365 available locally. As you can imagine, quite a few have the same names as the on-premises Exchange cmdlets, and they may therefore overlap. To avoid confusion, the recommended approach is to add a prefix to all cmdlets from the session, which can be defined when the session is imported. This sample script provides the username and password required to connect, configures the proxy options for the PowerShell session and sets up the session with O365.

    $password = ConvertTo-SecureString "password" -AsPlainText -Force
    $userid = "name-admin@company.onmicrosoft.com"
    $cred = New-Object System.Management.Automation.PSCredential $userid,$password
    $proxyOptions = New-PSSessionOption -ProxyAccessType IEConfig -ProxyAuthentication Negotiate -OperationTimeout 360000
    $global:session365 = New-PSSession -ConfigurationName Microsoft.Exchange -ConnectionUri https://ps.outlook.com/powershell/ -Credential $cred -Authentication Basic -AllowRedirection -SessionOption $proxyOptions
    Import-PSSession $global:session365 -Prefix O365

Once the session is imported, the cmdlets carry the prefix O365. As an example, commands go from:

Get-Mailbox

to

Get-O365Mailbox

Using the Import-service via Powershell

As noted, the Office 365 Import service is a GUI-only approach that is not supported for automation. That being said, there are options to start it via PowerShell. Multiple blog posts document the New-MailboxImportRequest cmdlet (with the prefix it is now New-O365MailboxImportRequest), however Microsoft support will barely acknowledge its existence.

As long as you have the previously stated account permissions assigned (the Mailbox Import Export role), the cmdlet will be available and can be used.

For Office 365 the only supported source is an Azure Storage Space. The import service creates one for you, however as of today (2016-05-12) we are unable to create the Shared Access Signature that would let the automation use that Storage Space. In January 2016 this did not seem to be the case, so potentially this will change again in the future.

The command line below will start an import. If you receive a 404 error there is most likely a bad path to the file, and a 403 most likely means a bad SAS key.

Remember: O365 is the prefix we chose when running Import-PSSession. The actual cmdlet is New-MailboxImportRequest.

    New-O365MailboxImportRequest -Mailbox user@mailbox.com `
        -AzureBlobStorageAccountUri https://yourstorage.blob.core.windows.net/folder/User/test.pst `
        -BadItemLimit unlimited -AcceptLargeDataLoss `
        -AzureSharedAccessSignatureToken "?sv=2012-02-12&se=9999-12-31T23%3A59%3A59Z&sr=c&si=IngestionSasForAzCopy201601121920498117&sig=Vt5S4hVzlzMcBkuH8bH711atBffdrOS72TlV1mNdORg%3D" `
        -TargetRootFolder Nameoffolderinmailbox
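For the dropbox scenario the same call has to be repeated per file, so it may help to build the arguments once and splat them. The sketch below only assembles the parameter set used in the command above. The function name and the default target folder are hypothetical, and it assumes the blob path is simply appended to the container URI.

```powershell
# Sketch only: builds a hashtable for splatting; it does not contact Office 365.
function New-PstImportParameterSet {
    param(
        [Parameter(Mandatory)] [string]$Mailbox,
        [Parameter(Mandatory)] [string]$ContainerUri,  # e.g. https://yourstorage.blob.core.windows.net/folder
        [Parameter(Mandatory)] [string]$BlobPath,      # e.g. User/test.pst
        [Parameter(Mandatory)] [string]$SasToken,
        [string]$TargetRootFolder = 'Imported PST'     # hypothetical default
    )
    @{
        Mailbox                         = $Mailbox
        # Join container and blob path with exactly one slash; a bad path gives a 404.
        AzureBlobStorageAccountUri      = '{0}/{1}' -f $ContainerUri.TrimEnd('/'), $BlobPath.TrimStart('/')
        AzureSharedAccessSignatureToken = $SasToken
        TargetRootFolder                = $TargetRootFolder
        BadItemLimit                    = 'unlimited'
        AcceptLargeDataLoss             = $true
    }
}

# Usage (not executed here):
# $params = New-PstImportParameterSet -Mailbox user@mailbox.com -ContainerUri $uri -BlobPath 'User/test.pst' -SasToken $sas
# New-O365MailboxImportRequest @params
```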

Retrieving statistics

Once the import is started it fires off and actually goes through pretty quickly. As you can imagine, the results can be retrieved with Get-O365MailboxImportRequest and Get-O365MailboxImportRequestStatistics. One oddity was that piping Get-O365MailboxImportRequest into Get-O365MailboxImportRequestStatistics didn’t work as expected. Apparently the required parameter is named Identity, and what it actually expects is the RequestGuid.

Sample loop:

    $mbxreqs = Get-O365MailboxImportRequest
    foreach ($mbx in $mbxreqs) {
        $mbxstat = Get-O365MailboxImportRequestStatistics -Identity $mbx.RequestGuid
        $mbxstat | Select-Object TargetAlias, Name, TargetRootFolder, EstimatedTransferSize, Status, AzureBlobStorageAccountUri, StartTimeStamp, CompletionTimeStamp, FailureTimeStamp, Identity
    }

The properties above were useful for a brief overview. Sometimes you can manage with Get-O365MailboxImportRequest alone.

Cleanup of Azure Storage Space

What does not happen automatically (well, nothing in this process happens automatically) is the removal of the PST files uploaded to the Azure Storage Space. Leaving the users' PST files in a Storage Space consumes resources (and money), and the users might be a bit uncomfortable about it. As always, the aim is to automate this process. To get the cmdlets for managing the Azure Storage Space (remember, there are multiple ways to handle this; AzCopy is a single-purpose tool) you need to download Azure PowerShell. Microsoft again has an excellent guide on how to get started. What would be even faster is if all these services provided a common approach to management. For Office 365 you import a session, but for Azure you download and install cmdlets?

Once the Azure PowerShell cmdlets are installed you can easily create a cleanup job that deletes any file older than 15 days. First a time limit is defined. Secondly we set up a connection to the Azure Storage Space (New-AzureStorageContext), and then we retrieve all files in our specific blob, filter them on our time limit and start removing them.

Good to know: Remove-AzureStorageBlob does accept -WhatIf. However, -WhatIf will still execute the remove. Test your code carefully… Most likely this is true for many other cmdlets.

    [datetime]$limit = (Get-Date).AddDays(-15)
    $context = New-AzureStorageContext -StorageAccountName $straccountname -StorageAccountKey $straccountkey -ErrorAction Stop
    Get-AzureStorageBlob -Container $strblob -Blob *.pst -Context $context |
        Where-Object { $_.LastModified -lt $limit } |
        ForEach-Object { Remove-AzureStorageBlob -Blob $_.Name -Container $strblob -Context $context }
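Since -WhatIf can't be trusted here, one way to test safely is to keep the age filter separate from the remove and only list the candidates first. This is a sketch; Select-ExpiredBlob is a hypothetical helper name, and it only assumes that each piped object has a LastModified property, as the blobs above do.

```powershell
# Sketch only: filters pipeline input by age and never deletes anything itself.
function Select-ExpiredBlob {
    param(
        [Parameter(ValueFromPipeline)] $Blob,
        [int]$MaxAgeDays = 15
    )
    begin { $limit = (Get-Date).AddDays(-$MaxAgeDays) }
    process {
        # Pass through only the blobs last modified before the limit.
        if ($Blob.LastModified -lt $limit) { $Blob }
    }
}

# Dry run (not executed here): review the list before adding the remove step.
# Get-AzureStorageBlob -Container $strblob -Blob *.pst -Context $context |
#     Select-ExpiredBlob | Select-Object Name, LastModified
```

Once the list looks right, the ForEach-Object with Remove-AzureStorageBlob can be appended to the same pipeline.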

Summary

A long rant that hasn’t given you anything. To be honest, these are memory notes for myself. Creating an automated workflow involves a lot of moving bits and pieces that make use of what the common man would define as the cloud. The cloud is several messy parts that aren’t polished, aren’t well documented, are always in preview (technical preview, beta, early release, not launched…) and are constantly changing.

All of the above are things that provided a bit of struggle. Most likely the struggle was due to a lack of insight into a few of the technologies; as more insight was gained, the right questions were asked. If you read all of the links above carefully you will most likely see a few comments from me.