After going down a rabbit hole trying to understand why ConfigMgr clients fail (fail to install, fail to keep themselves running, fail to repair themselves), it became clear that a lot of people have spent an insane amount of time doing the same thing. Oddly enough, very few people seem to understand the implications of doing things one way or another.

Hopefully this can be a summary post that will detail the implications of choosing different paths when installing the ConfigMgr client.

Prerequisites

There is extensive documentation of the technical requirements for the platform you intend to install the ConfigMgr client on. Most likely this will not be a major issue, as the majority of the requirements are fulfilled on any supported platform, and any prerequisite that is not available will be installed as part of the very stable CCMSetup.exe.

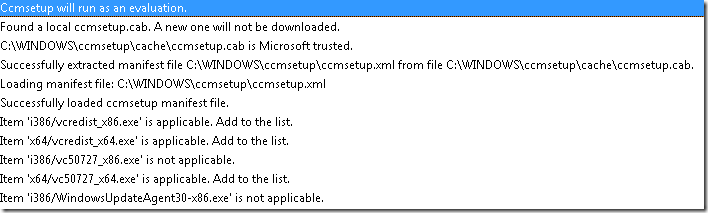

ConfigMgr setup (ccmsetup.exe) is a wrapper executable that leverages the contents of ccmsetup.cab. The .CAB file contains an XML file that details all prerequisite checks to be performed on a client. CCMSetup attempts to verify that the prerequisites are installed and, if not, downloads and installs them. The source repository can be defined in many ways; if none is specified, the folder which ccmsetup is started from will be the source.

Can this cause issues? Yes. If neither the folder where ccmsetup.exe is called from nor any alternative source folder specified contains the prerequisites listed in ccmsetup.cab, they can't be downloaded and the installation will fail. Or worse, if the prerequisites themselves are invalid, this will also cause issues. An invalid hash for SCEPInstall.exe in an update released by Microsoft was one such culprit, and the publicly acknowledged invalid signature of MicrosoftPolicyPlatformSetup.msi has also been the cause of headaches.

So let's clarify:

Don’t remove things from the ConfigMgr client source-folder.

Installation

Interaction

When ccmsetup.exe is started it runs as a non-interactive process, and the only way to review its progress is to read the log files (located in c:\windows\ccmsetup\logs). For the impatient hoping for a progress bar: there is none.

CCMSetup.exe has some intelligence to keep someone from firing off the non-interactive process and failing to properly install the ConfigMgr client. It uses Active Directory (in multiple ways) to identify most of the required settings; of course, there are also many optional and beneficial options.

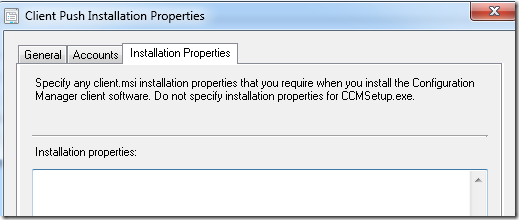

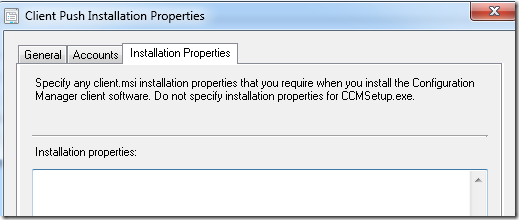

Install Properties

There is a small rule when it comes to passing parameters to ccmsetup.exe:

Parameters that start with a / are meant for the ccmsetup.exe wrapper itself, whereas parameters without it (usually written in capital letters) are meant for the MSI and used by the installation process. Parameters meant for ccmsetup.exe (with /) should be written first, before all parameters meant for the client MSI.
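As a rough illustration of that rule, here is a minimal Python sketch that sorts a list of arguments into wrapper switches and MSI properties and checks the ordering. The function names are made up for this post and this is not how ccmsetup.exe itself parses its command line; it only mirrors the "/ first, properties after" convention described above.

```python
def split_ccmsetup_args(args):
    """Split arguments into ccmsetup.exe switches (/...) and client MSI properties."""
    switches, properties = [], []
    for arg in args:
        if arg.startswith("/"):
            switches.append(arg)
        else:
            properties.append(arg)
    return switches, properties


def is_well_ordered(args):
    """Return True when every /switch appears before the first MSI property."""
    seen_property = False
    for arg in args:
        if arg.startswith("/"):
            if seen_property:
                return False
        else:
            seen_property = True
    return True


# The command line from the log excerpt in this post, without the executable itself.
example = ["/runservice", "SMSCONFIGSOURCE=P", "smssitecode=P01"]
switches, properties = split_ccmsetup_args(example)
print(switches, properties, is_well_ordered(example))
```

A check like this could sit in a deployment script to catch a misplaced switch before the command line is ever handed to ccmsetup.exe.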

So, the client will do the following to identify how it should be installed:

Parse commandline

Ccmsetup command line: "C:\WINDOWS\ccmsetup\ccmsetup.exe" /runservice "SMSCONFIGSOURCE=P" "smssitecode=P01"

Read from registry

Command line parameters for ccmsetup have been specified. No registry lookup for command line parameters is required.

Query Active Directory

Performing AD query: '(&(ObjectCategory=mSSMSManagementPoint)(mSSMSDefaultMP=TRUE)(mSSMSSiteCode=P01))'

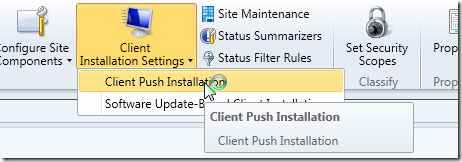

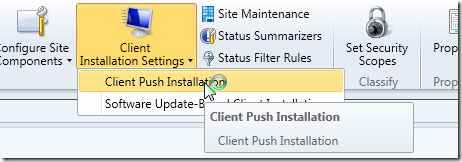

Parameters published within Active Directory are defined from the ConfigMgr environment when modifying the site settings.

So, as long as you have a fairly simple ConfigMgr environment, installing the ConfigMgr client will be simple and you can pass the installation files to anyone without a lot of instructions. Just double-click this file (ccmsetup.exe) and, at minimum, the settings will be retrieved from Active Directory.

Download of installation-files

If there are multiple offices, and perhaps even some sites with limited bandwidth and high latency, a quick question comes to mind: how can we optimize this?

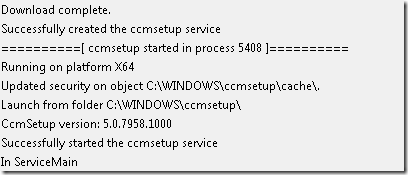

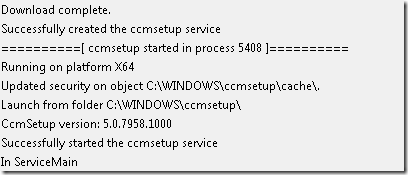

As previously noted, the most common scenario is that the client and the prerequisites are downloaded from the folder ccmsetup was started from into a local cache folder named ccmsetup. Technically, ccmsetup performs just enough actions to get this download started and then hands over to the local process, which continues to download the remaining prerequisites and client files, as illustrated by the log file.

Once the local file exists (along with ccmsetup.cab) the real (now local) installation-process will continue the installation. This will continue to download the remaining bits and pieces from either the source-folder or an alternative source-folder:

/source:<Path>

There can be multiple source folders specified, and before ccmsetup.exe attempts to use one it will verify that the source folder can be reached; if not, it will invalidate it.

Technically, the command line can look like this:

ccmsetup.exe /source:\\validfileshare\client /source:\\invalidfileshare\client /source:\\validfileshare2\client

The folder used is chosen based on availability (it must be reachable) and the order in which the folders were listed. There is no intelligence that calculates latency or bandwidth; the only check performed is whether the files are reachable. If you want that kind of intelligence, you can leverage the ConfigMgr infrastructure using the property /mp.
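The selection logic for /source can be sketched in a few lines of Python. This is only an approximation of the behaviour described above: the function name is hypothetical, and the reachability check (os.path.isdir by default) is an assumption, since the post does not establish exactly how ccmsetup.exe tests a source folder.

```python
import os


def pick_source(sources, is_reachable=os.path.isdir):
    """Return the first reachable source folder in listed order, or None.

    No latency or bandwidth is measured; order and reachability are the
    only criteria, as with the /source switch described above.
    """
    for source in sources:
        if is_reachable(source):
            return source
    return None


# Simulated reachability, so the example does not need real file shares.
reachable = {r"\\validfileshare\client", r"\\validfileshare2\client"}
chosen = pick_source(
    [r"\\invalidfileshare\client", r"\\validfileshare\client", r"\\validfileshare2\client"],
    is_reachable=lambda path: path in reachable,
)
print(chosen)
```

Note that the second valid share is never considered once the first reachable one is found, even if it happens to be closer to the client.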

/mp:<Computer>

MP stands for Management Point, and this property only defines which Management Point CCMSETUP will contact to retrieve the client source files. The property is not used by the ConfigMgr client itself (you can use a different property for that). The Management Point will leverage the ConfigMgr package created during its setup (called Configuration Manager Client Package) and all of the ConfigMgr infrastructure. Based on the boundaries defined and which Distribution Points serve those boundaries, the package is downloaded from the best local (read: fast boundary) Distribution Point, and if none is available it will fall back to a remote (read: slow) one.

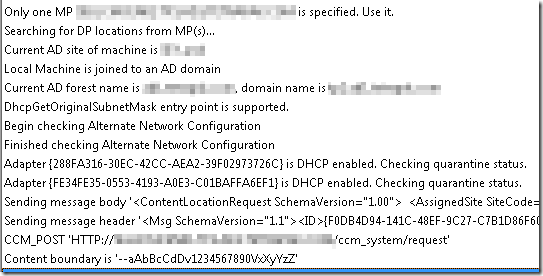

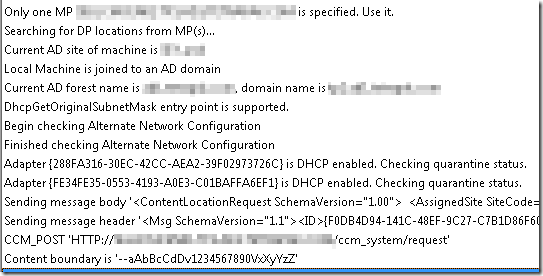

Sample review of the earlier stages of a CCMSetup run, where it is contacting the Management Point for content download.

The download from a Distribution Point will leverage BITS and the priority of that download can be specified using the BITS priority property.

/BITSPriority:<Priority>

Patches

ConfigMgr is a living product and therefore lots of updates are released for it. ConfigMgr 2007 had a set of specific solutions (hotfixes) released that included updates to the client, and every once in a while these were gathered up in a Service Pack or Release Pack (SP or R) which provided a brand new MSI file. ConfigMgr 2012 still has the concept of Service Pack and Release Pack, however very few individual hotfixes have been released between these releases; instead, fixes are released in cumulative packs that provide a new MSP file containing all previous fixes.

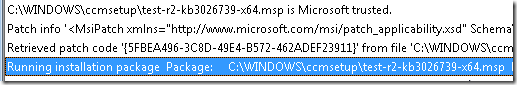

It is a great improvement that ConfigMgr 2012 has a single Microsoft Patch to install. Of course there is a desire to ensure that clients receive these fixes as soon as possible and, if possible, that the patch is applied at installation time. This is especially important during Operating System Deployment, as the fixes may resolve issues in the Operating System Deployment process itself.

Patches introduce the first challenge when it comes to ensuring that a client will install properly.

Supported method

Microsoft has recommended the approach of using the generic Windows Installer property PATCH. This has been the recommended method since SMS 2003, and continues to be the officially recommended way forward.

There are a few caveats to using the PATCH property, namely the limitations on how a file can be referenced there.

The file path has to be an absolute path, and no variables can be used when the command line is parsed by Windows Installer. If the referenced file does not have the extension MSP it will be ignored. In essence: you have to write the specific path where the file is located in plain old English (or.. well..)

Microsoft has therefore provided great blog articles (no TechNet article, as the property is a generic Windows Installer property) on how to leverage this for the published Client Installer Properties, and on multiple ways of using this property during the Operating System Deployment process.
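Those caveats are easy to check before a PATCH value ever reaches a client. The Python sketch below is a hypothetical helper, not part of any Microsoft tooling. It assumes that "variables" means %NAME%-style environment variables and only inspects the string; it does not test whether the file actually exists.

```python
from pathlib import PureWindowsPath


def check_patch_value(value):
    """Return a list of problems found in a PATCH property value (empty if none)."""
    problems = []
    path = PureWindowsPath(value.strip('"'))
    # Drive-letter paths (C:\...) and UNC paths (\\server\share\...) both count as absolute.
    if not path.is_absolute():
        problems.append("path is not absolute")
    # Assumption: variables are written %LIKE_THIS% and are never expanded.
    if "%" in value:
        problems.append("contains a % variable")
    if path.suffix.lower() != ".msp":
        problems.append("extension is not .msp")
    return problems


print(check_patch_value(r"C:\windows\temp\SCCMHotfix\configmgr2012ac-r2-kb2910552.msp"))
print(check_patch_value(r"%TEMP%\SCCMHotfix\configmgr2012ac-r2-kb2910552.msp"))
```

The first call should report no problems; the second is flagged both for the variable and for not being an absolute path.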

Applying a ConfigMgr Hotfix During the Client Installation of an OS Deployment

Patch ConfigMgr 2012 x86 and x64 clients during a task sequence using the PATCH property

Deploying ConfigMgr Hotfixes

There is one key takeaway worth noting: using the PATCH property will install all the bits of the new patch during the initial installation of the client, and once the installation is completed the client will be at the latest build. If the patch is applied post-installation instead, the ConfigMgr client has to be restarted (to release any file locks) before the update is actually applied.

The concept breaks down into a few parts, giving us a few different ways of populating the PATCH property depending on when and how we install the MSP.

Install on existing Windows machines

When using the published installer properties (used for client push scenarios and manually triggered installs without any overriding options) we reference the patch using a UNC path. The UNC path can reference any file share that the computer account has access to. Sample PATCH property:

PATCH=\\Siteserver\SMS_CNT\client\x64\hotfix\KB2905002\Configmgr2012ac-r2-kb2905002-x64.msp

Using a UNC path is of course a hard-coded reference to a specific file share (using DFS you can offer some abstraction here), however there is a concern to be raised with this method.

Referencing a UNC path requires that the client has access to the MSP file during the installation and whenever a future repair happens. If the file is not reachable (moved, network disconnected, permissions improperly configured) the installer will exit with the generic exit code 1635. Even worse is the complete failure of the installation in a high-latency / low-bandwidth scenario where the file-copy operation just fails. Sample error in such a scenario:

MSI: Action 1:44:18: InstallFiles. Copying new files ccmsetup 2015-08-02 01:44:18 8560 (0x2170)

MSI: Internal Error 2902. ixfAssemblyCopy ccmsetup 2015-08-02 01:44:20 8560 (0x2170)

MSI: Action 1:44:21: Rollback. Rolling back action: ccmsetup 2015-08-02 01:44:21 8560 (0x2170)
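When digging through a pile of logs for this failure, a small script saves some scrolling. The Python sketch below assumes the log lines look like the flattened excerpt above (the raw ccmsetup.log on disk may wrap each entry differently); the function name is made up for this post.

```python
import re

# Matches e.g. "MSI: Internal Error 2902. ixfAssemblyCopy"
MSI_ERROR = re.compile(r"MSI: Internal Error (\d+)\.\s*(\S*)")


def find_msi_errors(lines):
    """Return (error_code, detail) tuples for every MSI internal error in the lines."""
    found = []
    for line in lines:
        match = MSI_ERROR.search(line)
        if match:
            found.append((int(match.group(1)), match.group(2)))
    return found


sample = [
    "MSI: Action 1:44:18: InstallFiles. Copying new files ccmsetup 2015-08-02 01:44:18 8560 (0x2170)",
    "MSI: Internal Error 2902. ixfAssemblyCopy ccmsetup 2015-08-02 01:44:20 8560 (0x2170)",
    "MSI: Action 1:44:21: Rollback. Rolling back action: ccmsetup 2015-08-02 01:44:21 8560 (0x2170)",
]
print(find_msi_errors(sample))  # [(2902, 'ixfAssemblyCopy')]
```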

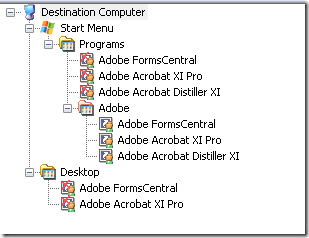

Install during Operating System Deployment

During OSD there seem to be two ways to reference the patches. The previous way (circa 2007 and a bit into the life span of 2012) was to reference the patches in their respective packages as downloaded by ConfigMgr during the OSD process. A sample path is given in the first article. The PATCH property would reference a folder temporarily created for a specific package, so the patch could be installed with local access.

PATCH="C:\_SMSTaskSequence\OSD\XXX00002\x64\hotfix\KB2905002\Configmgr2012ac-r2-kb2905002-x64.msp"

Later articles recommend a new method: run a pre-step that copies the file into a local folder (c:\windows\temp anyone?) and then reference this folder instead:

PATCH=C:\windows\temp\SCCMHotfix\configmgr2012ac-r2-kb2910552.msp

Summary

Briefly looking over these methods, we have a few things worth noting:

1. We do not have the ability to leverage a single way of referencing these patches if we were to follow Microsoft practices. UNC-paths for one method and a local-copy for a different method?

2. We cannot leverage the ConfigMgr infrastructure when downloading patches, since we are forced to reference them using a hardcoded path during a manual install / push scenario.

3. Patch installation from a UNC path may be high-risk, as any loss of network connectivity or a less-than-perfect connection may result in a complete failure of the client install. The preferred method (which is most likely why this is the new recommended approach for OSD) would be to download a copy to the local hard drive on the client.

4. There is no dynamic way of placing the patches in a single location and have the ConfigMgr client automatically apply them. We have a hardcoded reference to the patch location, and in addition we also have a hardcoded reference to what patch we are deploying.

Now, remember all these cons as more will be remarked later.

Unsupported method

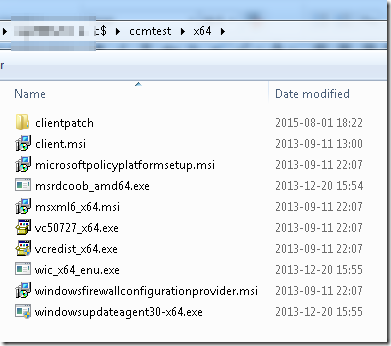

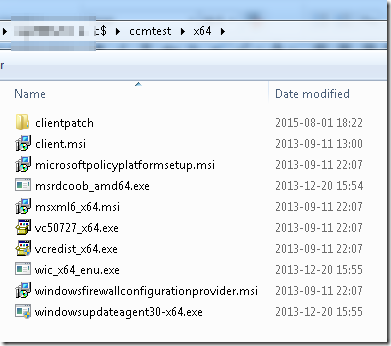

Since SMS 2003 there has been some legacy code that allows CCMSetup to detect a specific folder, download any patches located in this folder and then, post-installation, also install the patches.

The folder is called ClientPatch (surprise!) and should be created in each architecture directory.

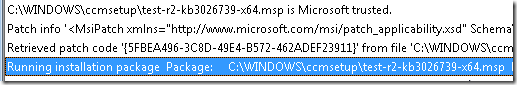

Once the installation is started and detects that this folder exists in the source folder it is currently using, it will download the file.

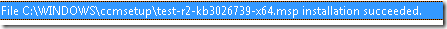

After the installation of client.msi is completed it will continue to install any downloaded MSP-files.

This method is unsupported, and the ConfigMgr team performs no testing of whether any changes they make will cause future issues with it. Publicly there is a lot of ‘it works without issues’, and some people saying ‘we have identified issues’.

People outside of Microsoft claim that it operates without issues.

SCCM 2012 Client Push – Including hotfixes / CU patches in Client Push

System Center 2012 Configuration Manager R2 Automating Client Patch installation during Client Push Install with CU2

ClientPatch folder method for including client updates – supported?

Change in how to apply Patch with SCCM Client push

GOOD INFO: HOW TO AUTOMATICALLY APPLY A CLIENT HOTFIX AS PART OF A CLIENT PUSH OR CLIENT INSTALLATION

Summary

The issues that have been identified relate to specific properties not being parsed properly when using this approach, as the installation order is to set up the client first and then automatically install the patch afterwards. As the patch installation must stop all services (think ConfigMgr agent, but also the Task Sequence service) to release any locks on files that it wants to update, the loss of properties seems harmless (and yet also a logical side effect) in comparison.

As you might guess, stopping the Task Sequence service during Operating System Deployment is a high-risk undertaking.

Maintenance

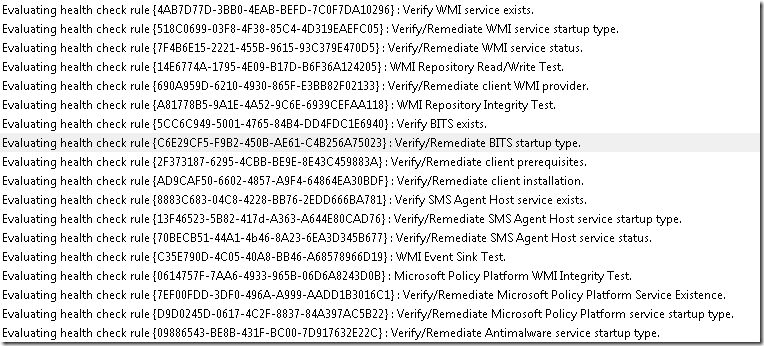

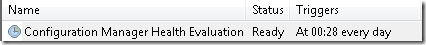

Configuration Manager has introduced several mechanisms to ensure a ConfigMgr client stays healthy. One part of this, which has been remarked early on, is the CCMEval scheduled task.

CCMEval performs two tasks: identify (task one) and remediate (task two) unhealthy clients. The name is a giveaway. There is an option to configure it to only perform the first (either through a property at install time or as a post-configuration task), which of course will only notify you as an administrator through the ConfigMgr console. And by notify we mean that you have to actively dig out the information under Monitoring –> Client Status.

CCMEval’s doings (or undoings) can be identified through the log-file named ccmsetup-ccmeval.log located in C:\Windows\ccmsetup\Logs.

Identify task

Let's run through what it does when performing a health check to identify an unhealthy client.

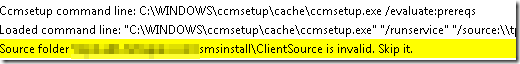

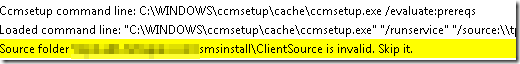

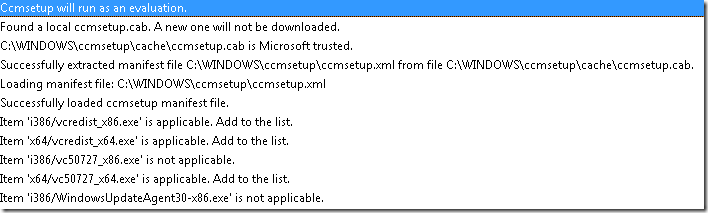

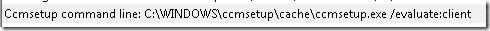

First we can see that it triggers ccmsetup with the parameter /evaluate, focusing only on prerequisites. Most likely this will confirm that all necessary prerequisites are installed; if some are missing, it checks that they are available for installation and should trigger an installation of the missing prerequisites.

As you can see, it remembers the command line we used for installing the client. The source folders are also validated, just as they are at install time.

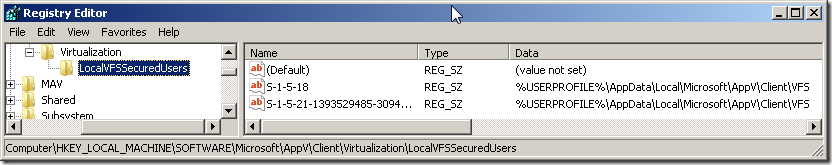

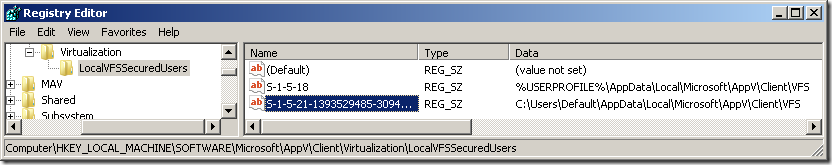

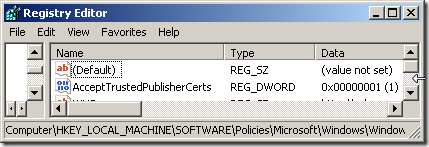

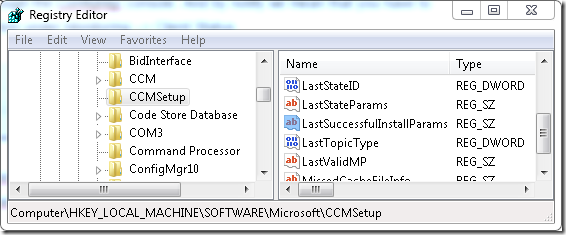

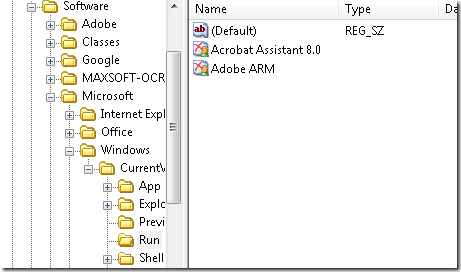

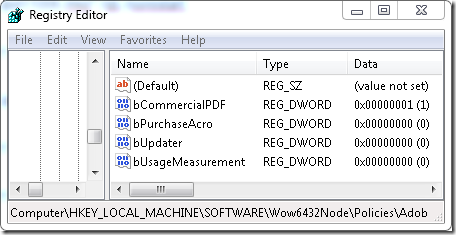

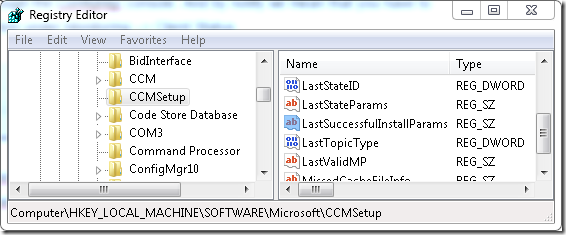

The previously used command-line for installation is saved away in the registry. As far as the investigation goes this little vital component is saved in the below registry-key.

Once it has validated the sources the client knows about (remember: working folder, /source and /mp are valid options to specify this during the initial installation) it will start to digest the information that it knows about the prerequisites. Just as in the initial installation, it will extract the XML file (now using the local copy) from ccmsetup.cab and then proceed to determine which prerequisites are applicable, available and installed.

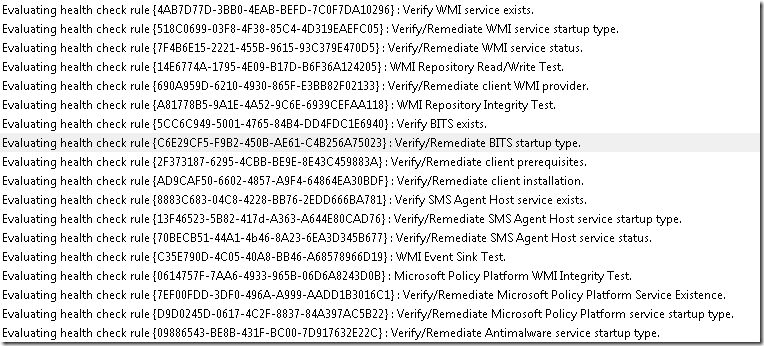

Once the prerequisites have had their run, there is a secondary run that validates the client itself.

The result of this (and which checks are performed to ensure that a healthy ConfigMgr client is on the machine) is logged in a secondary file located in C:\Windows\CCM\Logs, and it's named (surprise!) ccmeval.log.

Remediate Task

The above portion only identifies the current state of a client, and if configured as such, CCMEval will stop at that point and inform (notify) the ConfigMgr hierarchy of the result. By default it is also enabled to remediate a client which is considered unhealthy.

One of the more harmless remediations is to just start the CCMExec service. Fairly easy, right? Service not running? Start it.

One of the more challenging remediations is to reinstall either the prerequisites or even the client itself.

Wait. What? Why would this be a challenge? Let's play out two potential scenarios that are very likely to happen if following some of the articles referenced above.

Scenario #1

You followed the suggestion to ensure that the ConfigMgr 2012 hotfixes are installed during the Task Sequence that deploys the operating system, using the package that contains the ConfigMgr client. A sample PATCH property would look like this:

PATCH="C:\_SMSTaskSequence\OSD\XXX00002\x64\hotfix\KB2905002\Configmgr2012ac-r2-kb2905002-x64.msp"

As this is a temporary location for storing packages only during Task Sequence execution, the folder is removed once the Task Sequence has completed.

If the client were to become faulty due to <insert any reason>, a repair would be attempted using the initial command line as found in the registry (HKLM\Software\Microsoft\CCMSetup), such as this:

"/runservice" "/source:\\fileshare\validsource" "SMSCONFIGSOURCE=P" "smssitecode=P01" "FSP=fsp.domain.com" "PATCH="C:\_SMSTaskSequence\OSD\XXX00002\x64\hotfix\KB2905002\Configmgr2012ac-r2-kb2905002-x64.msp" "

However, this installation will fail with a generic error code of 1635 due to the installer being unable to locate the previously temporary folder. The patch is no longer in the above location.
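To find clients exposed to this scenario, the stored command line can be inspected for a PATCH value that points into the task sequence working folder. The Python sketch below is a rough illustration with hypothetical function names; how the command line is read out of the registry is left aside, and the regular expression is only tuned to command lines shaped like the sample above.

```python
import re

# Capture everything after PATCH= (and an optional quote) up to the first ".msp".
PATCH_VALUE = re.compile(r'PATCH="?([^"]+?\.msp)', re.IGNORECASE)


def patch_path_from_command_line(command_line):
    """Return the MSP path referenced by PATCH in a command line, or None."""
    match = PATCH_VALUE.search(command_line)
    return match.group(1) if match else None


def is_task_sequence_path(path):
    """True when the path lives under the temporary _SMSTaskSequence folder."""
    return "\\_smstasksequence\\" in path.lower()


stored = (
    r'"/runservice" "/source:\\fileshare\validsource" "SMSCONFIGSOURCE=P" '
    r'"smssitecode=P01" "FSP=fsp.domain.com" '
    r'"PATCH="C:\_SMSTaskSequence\OSD\XXX00002\x64\hotfix\KB2905002\Configmgr2012ac-r2-kb2905002-x64.msp" "'
)
patch = patch_path_from_command_line(stored)
print(patch, is_task_sequence_path(patch))
```

Any client where the second value comes back True is carrying a command line that can no longer be replayed successfully once the task sequence folder is gone.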

Scenario #2

During a client push scenario we have published the UNC-path where our patch-files are located on a file-share within our environment. This could be on the site-server, a DFS-root or somewhere else.

Sample property

PATCH=\\Siteserver\SMS_CNT\client\x64\hotfix\KB2905002\Configmgr2012ac-r2-kb2905002-x64.msp

There are two potential concerns that may cause harm here if a repair were ever to be triggered. If, a year later, an admin were hassled into cleaning up, saving disk space or performing some similar action, a scenario will arise where the patch is not available. Perhaps a new way of distributing the hotfix was discovered and it was decided to clean up old file shares. Well, if the above patch were no longer available at the above location, all future repairs would halt with a generic 1635 error.

The other concern is when the file is still available but the client is constrained by high latency. Perhaps some packet drops. Well, perhaps the connection is congested with Channel9 video streams. During the patch file-copy part the following error is very likely to happen:

MSI: Action 1:44:18: InstallFiles. Copying new files ccmsetup 2015-08-02 01:44:18 8560 (0x2170)

MSI: Internal Error 2902. ixfAssemblyCopy ccmsetup 2015-08-02 01:44:20 8560 (0x2170)

MSI: Action 1:44:21: Rollback. Rolling back action: ccmsetup 2015-08-02 01:44:21 8560 (0x2170)

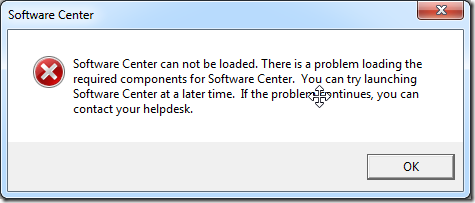

Summary

In the two scenarios above we will end up with a completely unhealthy ConfigMgr client.

This is why a suggestion has been created to combine the PATCH property method of installing patches and the ClientPatch-concept into a single new way forward of deploying patches.

Go vote!